Introduction

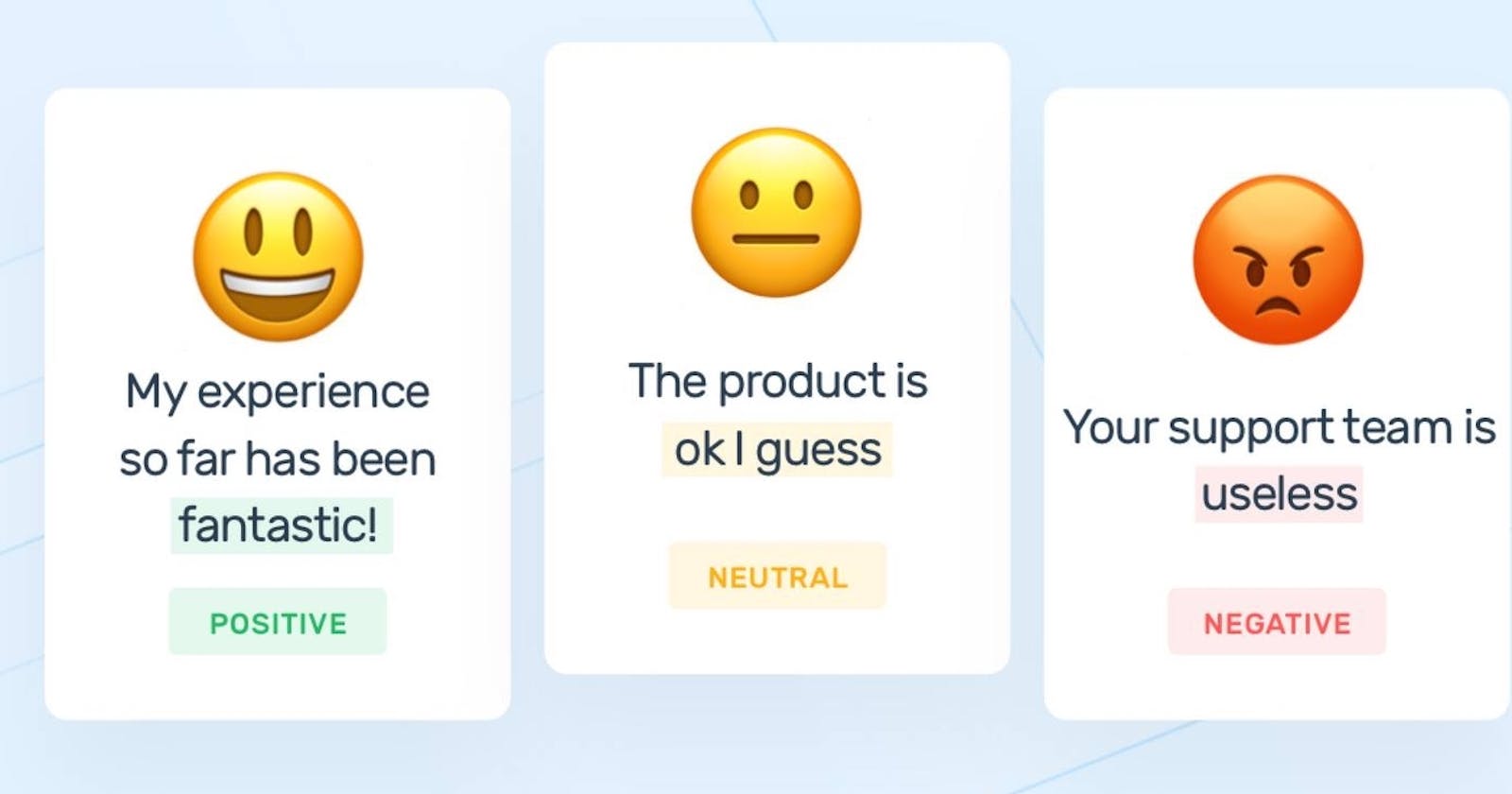

In this article, we'll demonstrate how to create a NestJS-based sentiment analysis application that uses natural language processing (NLP) to evaluate text data from user reviews and identify each user's sentiment (reaction).

Why do companies need sentiment analysis?

To build a strong product roadmap, product managers need to offer customers what they genuinely want, rather than what the company believes they need.

Sentiment analysis is the automated process of determining the attitude expressed in users' reviews. It lets product managers understand how customers feel about their products without reading every review by hand.

The benefits of automated sentiment analysis include:

- Recognize the pros and cons customers see in your product.

- Save hundreds of hours of tedious data processing, and gain 24/7 access to up-to-date product analytics instead.

- Compare reviews of your products with reviews of your competitors' products.

Sentiment analysis is built on natural language processing (NLP), the set of techniques that let software process human language. Here, NLP turns users' comments on a product into meaningful, actionable data.

Prerequisite

Setting up the application

To set up the NestJS application, clone the starter repository I provided on my GitHub account:

```bash
git clone https://github.com/r-scheele/nestjs-sentiment
```

Change into the newly created folder and install all of the project's dependencies:

```bash
cd nestjs-sentiment/ && npm install
```

Start the application with:

```bash
npm run start:dev
```

Now that our application has been successfully set up, let's use NLP to implement the sentiment analysis functionality.

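First, we'll install Natural, a Node.js package that provides most of the NLP algorithms we'll use in this project. Install it in your terminal, together with the other packages the service imports (apos-to-lex-form, spelling-corrector and stopword), by running npm install natural apos-to-lex-form spelling-corrector stopword.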

Controllers

Controllers handle incoming requests and return responses to the client, so it makes sense to start our implementation here.

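```typescript
// app.controller.ts

import { Body, Controller, Post } from '@nestjs/common';

import { AppService } from './app.service';
import { CreateReviewDto } from './create-review.dto';

@Controller()
export class AppController {
  constructor(private readonly appService: AppService) {}

  // POST rather than GET, because the review is sent in the request body
  // (many HTTP clients do not send a body with GET requests)
  @Post('analyze')
  getAnalysis(@Body() analysis: CreateReviewDto) {
    // Delegate to the service method, which is named analyzeReview
    return this.appService.analyzeReview(analysis);
  }
}
```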

NestJS provides a @Body() decorator that gives us easy access to the request body. The body's shape is described by a Data Transfer Object (DTO), which defines the format of the data sent in a request (or returned in a response). A DTO can be a class or a TypeScript interface. However, interfaces are erased at compile time, so validation decorators like the ones below require a class.

```typescript
// create-review.dto.ts

import { IsNotEmpty, IsString } from 'class-validator';

export class CreateReviewDto {
  @IsString()
  readonly name: string;

  @IsString()
  @IsNotEmpty()
  readonly review: string;
}
```

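Class-validator lets us use decorators to validate the structure and types of the properties on the request body. NestJS enforces these rules only when a ValidationPipe is active, for example after calling app.useGlobalPipes(new ValidationPipe()) in main.ts.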
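To make the two decorators concrete, here is a dependency-free sketch of the checks they express on CreateReviewDto. It is a hand-written illustration, not how class-validator works internally. The ReviewInput interface, the validateReview function and its error messages are hypothetical.

```typescript
// Hypothetical hand-written equivalent of the CreateReviewDto decorators,
// for illustration only (class-validator is not implemented this way).
interface ReviewInput {
  name?: unknown;
  review?: unknown;
}

function validateReview(input: ReviewInput): string[] {
  const errors: string[] = [];

  // @IsString() on `name`
  if (typeof input.name !== "string") {
    errors.push("name must be a string");
  }

  // @IsString() on `review`
  if (typeof input.review !== "string") {
    errors.push("review must be a string");
  }

  // @IsNotEmpty() on `review`: rejects "", null and undefined
  if (input.review === undefined || input.review === null || input.review === "") {
    errors.push("review should not be empty");
  }

  return errors;
}

console.log(JSON.stringify(validateReview({ name: "Ada", review: "Great product" }))); // []
console.log(JSON.stringify(validateReview({ name: "Ada", review: "" }))); // ["review should not be empty"]
```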

NestJS service

A service keeps business logic out of the controllers, which makes the code cleaner and easier to test. Our service takes a user's review, processes it and returns the sentiment analysis score.

```typescript
// app.service.ts

import { Injectable } from '@nestjs/common';
// @ts-ignore: apos-to-lex-form ships without type declarations
import aposToLexForm from 'apos-to-lex-form';
import { PorterStemmer, SentimentAnalyzer, WordTokenizer } from 'natural';
// @ts-ignore: spelling-corrector ships without type declarations
import SpellCorrector from 'spelling-corrector';
import { removeStopwords } from 'stopword';

import { CreateReviewDto } from './create-review.dto';

// Load the spelling dictionary once, when the module is first imported
const spellCorrector = new SpellCorrector();
spellCorrector.loadDictionary();

@Injectable()
export class AppService {
  // Reuse the tokenizer and analyzer across requests instead of rebuilding them
  private readonly tokenizer = new WordTokenizer();
  // Arguments: language, stemmer, vocabulary (lexicon)
  private readonly analyzer = new SentimentAnalyzer('English', PorterStemmer, 'afinn');

  analyzeReview(analysis: CreateReviewDto): number {
    // Expand contractions, lowercase, and strip non-alphabetical characters
    const formattedReview: string = aposToLexForm(analysis.review)
      .toLowerCase()
      .replace(/[^a-zA-Z\s]+/g, '');

    // Tokenization
    const tokens = this.tokenizer.tokenize(formattedReview);

    // Correcting spelling errors
    const fixedSpelling = tokens.map((word: string) => spellCorrector.correct(word));

    // Removing stop words
    const stopWordsRemoved = removeStopwords(fixedSpelling);

    // Stemming is applied inside the analyzer via the PorterStemmer passed above;
    // the returned score is the sentiment of the review
    return this.analyzer.getSentiment(stopWordsRemoved);
  }
}
```

The analyzeReview() method has a few notable steps:

User review processing

The raw data we receive from users is often noisy and likely to contain errors, so it must be transformed into a format our NLP system can understand and use. To keep the text uniform, we expand contractions (such as I'm and you're) into their standard forms (I am, you are) with the previously installed apos-to-lex-form package. We then remove all non-alphabetical characters and symbols and convert the text to lowercase, so the sentiment algorithm treats "good" and "GOOD" as the same word.
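The normalization step can be sketched without any dependencies. This is a minimal sketch with clear assumptions. The CONTRACTIONS map is a hypothetical four-entry stand-in for the much larger table in apos-to-lex-form, and normalizeReview is an illustrative name. The lowercase-and-strip regular expression is the same one the service uses.

```typescript
// Hypothetical, tiny contraction table standing in for apos-to-lex-form,
// which ships a much larger mapping.
const CONTRACTIONS = new Map<string, string>([
  ["i'm", "i am"],
  ["you're", "you are"],
  ["don't", "do not"],
  ["it's", "it is"],
]);

function normalizeReview(review: string): string {
  // 1. Expand contractions, looking up each whitespace-separated word
  //    case-insensitively (words are re-joined with single spaces)
  const expanded = review
    .split(/\s+/)
    .map((word) => CONTRACTIONS.get(word.toLowerCase()) ?? word)
    .join(" ");

  // 2. Lowercase, so "GOOD" and "good" become the same word
  // 3. Remove everything that is not a letter or whitespace
  return expanded.toLowerCase().replace(/[^a-zA-Z\s]+/g, "");
}

console.log(normalizeReview("I'm SO happy, it's GOOD!!!"));
// → i am so happy it is good
```

In this sketch, a contraction followed directly by punctuation (for example "it's,") is not expanded, because the lookup uses the whole whitespace-separated word.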

Tokenization

Tokenization is the process of splitting text into meaningful units called tokens. A sentence can be thought of as a token of a paragraph, and a word as a token of a sentence. Here, we split each review into words.
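A word tokenizer can be approximated with a single regular expression. The tokenize function below is a hypothetical sketch. It splits on any run of characters other than letters, digits and underscores. Natural's WordTokenizer behaves similarly on plain English text, but this sketch is not guaranteed to match it exactly.

```typescript
// Hypothetical word tokenizer: split on runs of non-word characters and
// drop the empty strings produced by leading or trailing separators.
function tokenize(text: string): string[] {
  return text.split(/[^A-Za-z0-9_]+/).filter((token) => token.length > 0);
}

console.log(JSON.stringify(tokenize("i am so happy it is good")));
// → ["i","am","so","happy","it","is","good"]
```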

Correcting spelling mistakes

Because customers write their product reviews by hand, typos are likely. We use the spelling-corrector package to fix them before sending the data to the sentiment analysis algorithm. This way, if a user accidentally types "lov", the algorithm receives the correct spelling, "love".
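The idea behind spelling correction can be sketched with edit distance. The sketch picks the dictionary word that needs the fewest single-character insertions, deletions or substitutions to match the typed word. This is a swapped-in, simplified technique for illustration only, because the spelling-corrector package uses its own dictionary and candidate ranking. DICTIONARY, editDistance and correct are hypothetical names.

```typescript
// Hypothetical, tiny dictionary for illustration.
const DICTIONARY = ["love", "like", "hate", "good", "product"];

// Levenshtein distance: dp[i][j] is the number of edits needed to turn
// the first i characters of a into the first j characters of b.
function editDistance(a: string, b: string): number {
  const dp: number[][] = Array.from({ length: a.length + 1 }, (_, i) =>
    Array.from({ length: b.length + 1 }, (_, j) => (i === 0 ? j : j === 0 ? i : 0)),
  );
  for (let i = 1; i <= a.length; i++) {
    for (let j = 1; j <= b.length; j++) {
      const cost = a[i - 1] === b[j - 1] ? 0 : 1;
      dp[i][j] = Math.min(
        dp[i - 1][j] + 1, // deletion
        dp[i][j - 1] + 1, // insertion
        dp[i - 1][j - 1] + cost, // substitution (or match)
      );
    }
  }
  return dp[a.length][b.length];
}

// Return the closest dictionary word within maxDistance edits,
// or the original word if none is close enough.
function correct(word: string, maxDistance = 2): string {
  if (DICTIONARY.includes(word)) return word;
  let best = word;
  let bestDistance = maxDistance + 1;
  for (const candidate of DICTIONARY) {
    const distance = editDistance(word, candidate);
    if (distance < bestDistance) {
      best = candidate;
      bestDistance = distance;
    }
  }
  return best;
}

console.log(correct("lov")); // love
```

In this sketch, ties go to the word that appears first in DICTIONARY. Words with no candidate within maxDistance are returned unchanged, so unfamiliar names or jargon are not forced into an unrelated word.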

Removing stop words

Stop words are the most common words in a language, such as but, a, or, and what. They are typically removed before processing because they carry little information about a user's sentiment, and removing them lets the analysis concentrate on the important keywords.
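Stop-word removal is a simple set-membership filter. In this sketch, STOP_WORDS is a short, hypothetical list chosen for the example, and removeStopWords is an illustrative name. The stopword package's removeStopwords uses its own, much longer English list.

```typescript
// Hypothetical, abbreviated stop-word list for illustration.
const STOP_WORDS = new Set(["a", "an", "and", "but", "or", "the", "what", "i", "am", "so", "it", "is"]);

// Keep only tokens that are not in the stop-word set (case-insensitive).
function removeStopWords(tokens: string[]): string[] {
  return tokens.filter((token) => !STOP_WORDS.has(token.toLowerCase()));
}

console.log(JSON.stringify(removeStopWords(["i", "am", "so", "happy", "it", "is", "good"])));
// → ["happy","good"]
```

When choosing a stop-word list for sentiment analysis, check whether it contains negations such as "not". Dropping them can hide what a review actually says.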

Stemming

Stemming is a word normalization procedure in NLP that reduces derived or inflected words to their base or root form. For instance, the Porter stemmer used in our service reduces "giving" and "gives" to "give". Irregular forms such as "gave" are left unchanged, because mapping them to "give" requires lemmatization rather than suffix stripping.
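Stemming maps several inflected forms onto one key, and a toy suffix stripper can show that core idea. The toyStem function and SUFFIXES list are hypothetical. They are far cruder than the Porter stemmer the service passes to SentimentAnalyzer, because they have no notion of Porter's measure or vowel/consonant rules. Treat this only as an illustration.

```typescript
// Toy suffix-stripping stemmer for illustration only; NOT the Porter algorithm.
const SUFFIXES = ["ing", "ed", "s"];

function toyStem(word: string): string {
  for (const suffix of SUFFIXES) {
    // Keep at least three characters of stem so short words like "is" survive
    if (word.endsWith(suffix) && word.length - suffix.length >= 3) {
      return word.slice(0, word.length - suffix.length);
    }
  }
  return word;
}

console.log(["playing", "played", "plays", "play"].map(toyStem).join(" "));
// → play play play play
```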

Sentiment analysis

Once the text data is in the right shape, we can analyze customer reviews with Natural's SentimentAnalyzer.

The Natural library's sentiment analysis is based on a lexicon that assigns each word a polarity. For instance, in the AFINN lexicon the word "good" has a polarity of 3, while the word "evil" has a polarity of -3. The algorithm calculates the sentiment by adding up the polarities of the words in a passage of text and normalizing the sum by the number of words.
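The scoring rule described above can be sketched in a few lines: sum the polarities, then normalize by the number of words. The LEXICON map and lexiconScore function are hypothetical, and LEXICON holds only the two scores quoted in the text. The sketch also omits the stemmed lookups and any extra rules, such as negation handling, that Natural's analyzer may apply.

```typescript
// Hypothetical mini-lexicon with the two polarities quoted above.
// A Map avoids accidentally matching inherited object keys such as "constructor".
const LEXICON = new Map<string, number>([
  ["good", 3],
  ["evil", -3],
]);

// Sum the polarity of every token found in the lexicon (unknown words count as 0),
// then divide by the total number of tokens.
function lexiconScore(tokens: string[]): number {
  if (tokens.length === 0) return 0;
  let total = 0;
  for (const token of tokens) {
    total += LEXICON.get(token) ?? 0;
  }
  return total / tokens.length;
}

console.log(lexiconScore(["good", "product"])); // 1.5
console.log(lexiconScore(["evil", "service", "overall"])); // -1
```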

Preparing the data and eliminating the noise was essential for getting a more accurate result. If the algorithm returns a negative value, the text is regarded as negative. If it returns a positive value, the text is considered positive, and if it returns zero, the text is deemed neutral.
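Turning the score into a label follows the sign rule described above. The classify function and the SentimentLabel type are illustrative names and are not part of Natural.

```typescript
type SentimentLabel = "positive" | "negative" | "neutral";

// Map a numeric sentiment score to a label by its sign.
function classify(score: number): SentimentLabel {
  if (score > 0) return "positive";
  if (score < 0) return "negative";
  return "neutral";
}

console.log([1.5, -1, 0].map(classify).join(", "));
// → positive, negative, neutral
```

A production system might treat scores very close to zero as neutral by using a small threshold instead of an exact comparison. That threshold would have to be chosen from your own data.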

The SentimentAnalyzer constructor has three parameters:

- The language of the text data

- The stemmer

- The vocabulary (currently AFINN, Senticon or Pattern). We use AFINN in this example because it is a simple and widely used lexicon for sentiment analysis.

The completed version of this project is on my GitHub profile. Clone the project-end branch with:

```bash
git clone -b project-end https://github.com/r-scheele/nestjs-sentiment.git
```